TL;DR

In the Linux Git repository:

hyperfine --export-markdown /tmp/tldr.md --warmup 10 'git ls-files' 'find' 'fd --no-ignore'

| Command | Mean [ms] | Min [ms] | Max [ms] | Relative |
|---|---|---|---|---|
| `git ls-files` | 16.9 ± 0.5 | 16.3 | 18.2 | 1.00 |
| `find` | 93.1 ± 0.7 | 92.4 | 95.7 | 5.52 ± 0.16 |
| `fd --no-ignore` | 85.8 ± 7.5 | 81.1 | 111.3 | 5.08 ± 0.47 |

git ls-files is more than 5 times faster than both fd --no-ignore and find!

Introduction

In my editor, I changed my mapping to open files from fd[^1] to git ls-files[^2], and I noticed it felt faster after the change. That is intriguing, given fd’s goal of being very fast. Git, on the other hand, is primarily a source code management system (SCM); its main business[^3] is not to help you list your files! Let’s run some benchmarks to make sure.

Benchmarks

Is git ls-files actually faster than fd or is that just an illusion? In our benchmark, we will use:

- fd 8.2.1
- git 2.33.0
- find 4.8.0
- hyperfine 1.11.0

We run the benchmarks with the disk cache filled; we are not measuring the cold-cache case. That’s because in your editor, you may run these commands multiple times and would benefit from the cache. The results are similar for an in-memory repo, which confirms that the cache is filled.

Also, you work on those files, so they should be in cache to a degree. We also make sure to be on a quiet PC, with CPU power-saving deactivated. Furthermore, the CPU has 8 cores with hyper-threading, so fd uses 8 threads. Last but not least, unless otherwise noted, the files in the repo are only the ones committed, for instance, no build artifacts are present.

A Test Git Repository

We first need a Git repository. I’ve chosen to clone[^4] the Linux kernel repo because it is a fairly big one and a reference for Git performance measurements. This is important to ensure searches take a non-trivial amount of time: as hyperfine rightfully points out, short run times (less than 5 ms) are more difficult to compare accurately.

git clone --depth 1 --recursive ssh://git@github.com/torvalds/linux.git ~/ghq/github.com/torvalds/linux

cd ~/ghq/github.com/torvalds/linux

Choosing the Commands

We want to evaluate git ls-files versus fd and find. However, getting exactly the same list of files is not a trivial task:

| Command | Output lines |
|---|---|
| `git ls-files` | 72219 |
| `find` | 77039 |
| `fd --no-ignore` | 76705 |
| `fd --no-ignore --hidden` | 77038 |
| `fd` | 72363 |

After some more tries, it turns out that this command gives exactly[^5] the same output as git ls-files:

fd --no-ignore --hidden --exclude .git --type file --type symlink

It is a fairly complicated command, with various criteria on the files to print and that could translate to an unfair advantage to git ls-files. Consequently, we will also use the simpler examples in the table above.
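One way to check that two commands print the same file list is to compare their outputs as sets. The following is a minimal Python sketch, assuming both outputs were already captured as text; the helper name `listing_diff` is made up for illustration, and the `./` stripping is only there to cope with `find`-style prefixes.

```python
def listing_diff(a_output, b_output):
    """Return (paths only in a, paths only in b) for two newline-separated listings."""

    def normalize(output):
        paths = set()
        for line in output.splitlines():
            if not line:
                continue
            # `find` prints "./path"; strip the prefix so listings are comparable.
            if line.startswith("./"):
                line = line[2:]
            paths.add(line)
        return paths

    a, b = normalize(a_output), normalize(b_output)
    return a - b, b - a
```

Two empty sets mean both commands list the same paths. The comparison ignores ordering and duplicates, so it is looser than a line-by-line diff.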

Hyperfine

Hyperfine is a great tool to compare various commands: it has colored and Markdown output, attempts to detect outliers, tunes the number of runs, and more. Here is an asciinema[^6] showing its output[^7]:

First Results

For our first benchmark, on an SSD with btrfs, with commit ad347abe4a… checked out, we run:

hyperfine --export-markdown /tmp/1.md --warmup 10 'git ls-files' \
    'find' 'fd --no-ignore' 'fd --no-ignore --hidden' 'fd' \
    'fd --no-ignore --hidden --exclude .git --type file --type symlink'

This yields the following results:

| Command | Mean [ms] | Min [ms] | Max [ms] | Relative |
|---|---|---|---|---|
| `git ls-files` | 16.9 ± 0.6 | 16.3 | 19.2 | 1.00 |
| `find` | 93.2 ± 0.5 | 92.5 | 94.8 | 5.50 ± 0.19 |
| `fd --no-ignore` | 86.6 ± 7.8 | 80.5 | 115.7 | 5.11 ± 0.49 |
| `fd --no-ignore --hidden` | 121.0 ± 6.2 | 112.3 | 132.3 | 7.14 ± 0.44 |
| `fd` | 231.6 ± 22.3 | 200.8 | 272.5 | 13.68 ± 1.40 |
| `fd --no-ignore --hidden --exclude .git --type file --type symlink` | 80.9 ± 5.0 | 77.5 | 95.3 | 4.78 ± 0.34 |
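To read the Relative column, it helps to see how such a ratio and its uncertainty can be derived from two means and their standard deviations. The sketch below assumes first-order error propagation for a ratio of independent quantities; that is an assumption about how the column is computed, not something taken from hyperfine itself, and the function name `relative_speed` is illustrative.

```python
import math


def relative_speed(mean, stddev, ref_mean, ref_stddev):
    """Ratio of two mean run times, with a propagated standard deviation."""
    ratio = mean / ref_mean
    # Relative errors of independent quantities add in quadrature.
    relative_error = math.sqrt((stddev / mean) ** 2 + (ref_stddev / ref_mean) ** 2)
    return ratio, ratio * relative_error


# `find` versus `git ls-files`, using the rounded values from the table.
ratio, uncertainty = relative_speed(93.2, 0.5, 16.9, 0.6)
print(f"{ratio:.2f} ± {uncertainty:.2f}")
```

With the rounded table values this gives roughly 5.5 ± 0.2, close to the 5.50 ± 0.19 reported; the small gap is expected since the table only shows one decimal of the underlying measurements.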

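As mentioned in the TL;DR, git ls-files is more than 5 times faster than find and fd --no-ignore. Even its closest competitor, the fd command that prints exactly the same file list, is nearly 5 times slower (4.78 ± 0.34)! Let’s find out why that is.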

How Does Git Store Files in a Repository

To understand where this performance advantage of git ls-files comes from, let’s look into how files are stored in a repository. This is a quick overview; you can find more details about Git’s storage internals in the Pro Git book.

Git Objects

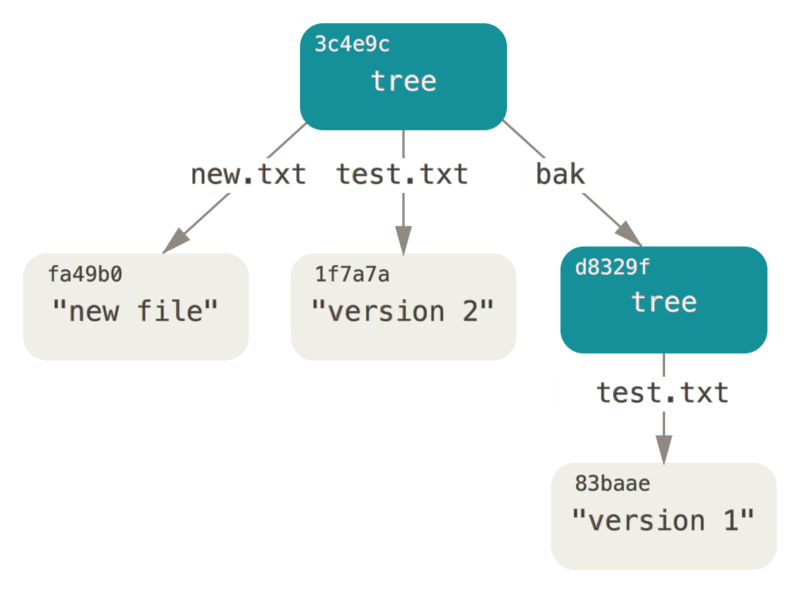

Git builds its own internal representation of the file system tree in the repository:

From the Pro Git book, written by Scott Chacon and Ben Straub and published by Apress, licensed under the Creative Commons Attribution Non Commercial Share Alike 3.0 license, copyright 2021.

In the figure above, each tree object contains a list of folder or names and references to these (among other things). This representation is then stored by its hash in the .git folder, like so:

.git/objects
├── 65
│   └── 107a3367b67e7a50788f575f73f70a1e61c1df
├── e6
│   └── 9de29bb2d1d6434b8b29ae775ad8c2e48c5391
├── f0
│   └── f1a67ce36d6d87e09ea711c62e88b135b60411
├── info
└── pack

As a result, to list the content of a folder, it seems Git has to access the corresponding tree object, stored in a file contained in a folder with the beginning of the hash. But doing that for the currently checked out files all the time would be slow, especially for frequently used commands like git status. Fortunately, git also maintains an index for files in the current working directory.
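The mapping from an object hash to its file under .git/objects can be sketched in a few lines of Python. This assumes a 40-character SHA-1 identifier and a loose (unpacked) object; objects stored in packfiles under .git/objects/pack have no such file. The function name `loose_object_path` is made up for this illustration.

```python
from pathlib import Path


def loose_object_path(git_dir, object_id):
    """Path where a loose object with this hex id would be stored."""
    if len(object_id) != 40 or any(c not in "0123456789abcdef" for c in object_id):
        raise ValueError("expected a 40-character lowercase hex SHA-1")
    # The first two hex digits name the folder, the remaining 38 name the file.
    return Path(git_dir) / "objects" / object_id[:2] / object_id[2:]
```

For the first entry of the listing above, `loose_object_path(".git", "65107a3367b67e7a50788f575f73f70a1e61c1df")` points to the file inside the `65` folder.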

Git Index

This index lists (among other things) each file in the repository, along with file-system metadata such as the last modification time. More details and examples are provided here.
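To make the idea concrete, here is a rough Python sketch that extracts the paths from an index file. It assumes index version 2 with SHA-1 object names, where each entry has a 62-byte fixed part followed by the NUL-terminated path, padded to a multiple of 8 bytes. That layout is my reading of Git's index format documentation and should be treated as an assumption; other versions, extensions, and the trailing checksum are not handled. The function name `list_index_paths` is illustrative.

```python
import struct


def list_index_paths(index_bytes):
    """Return the paths recorded in a version 2 Git index, in stored order."""
    signature, version, count = struct.unpack_from(">4sII", index_bytes, 0)
    if signature != b"DIRC":
        raise ValueError("not a Git index file")
    if version != 2:
        raise ValueError(f"this sketch only handles index version 2, got {version}")

    paths = []
    offset = 12  # the header is 12 bytes long
    for _ in range(count):
        # Fixed part: ten 32-bit stat fields, a 20-byte SHA-1, 16-bit flags.
        name_start = offset + 62
        name_end = index_bytes.index(b"\0", name_start)
        name = index_bytes[name_start:name_end]
        paths.append(name.decode("utf-8", "surrogateescape"))
        # Each entry is NUL-padded (1 to 8 bytes) to a multiple of 8 bytes.
        offset += (62 + len(name) + 8) // 8 * 8
    return paths
```

Called as `list_index_paths(open(".git/index", "rb").read())`, it only needs to read a single file to produce the list, with no directory traversal at all.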

So, it seems that the index has everything ls-files requires. Let’s check that ls-files actually uses it.

Strace

Let’s ensure that ls-files uses only the index, without scanning many files in the repo or the .git folder. That would explain its performance advantage, as reading a file is cheaper than traversing many folders. To this end, we’ll use strace[^8] like so:

strace -e !write git ls-files>/dev/null 2>/tmp/a

It turns out the .git/index is read:

openat(AT_FDCWD, ".git/index", O_RDONLY) = 3

And we are not reading objects in the .git folder or files in the repository. A quick check of Git’s source code confirms this. We now have an explanation for the speed git ls-files displays in our benchmarks!
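A saved strace output quickly becomes long, so a small script helps to summarize it. The sketch below is a hypothetical helper, not part of the original workflow: it counts system calls by name and extracts the paths passed to `openat`, assuming the one-call-per-line format shown above.

```python
import re
from collections import Counter

SYSCALL_RE = re.compile(r"^(\w+)\(")
OPENAT_RE = re.compile(r'^openat\([^,]+, "([^"]*)"', re.MULTILINE)


def count_syscalls(trace_text):
    """Count system calls by name, skipping lines that are not calls."""
    counts = Counter()
    for line in trace_text.splitlines():
        match = SYSCALL_RE.match(line)
        if match:
            counts[match.group(1)] += 1
    return counts


def opened_paths(trace_text):
    """Return the path argument of every openat call, in order."""
    return OPENAT_RE.findall(trace_text)
```

Running `opened_paths(open("/tmp/a").read())` on the trace of plain `git ls-files` should show `.git/index` but no repository folders, if the explanation above holds.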

Other Scenarios

However, listing files in a fully committed repository is not the most common case when you work on your code: as you make changes, a growing portion of the files is changed or added. How does git ls-files compare in these other scenarios?

With Changes

When there are changes to some files, we shouldn’t see any significant performance difference: the index is still usable directly to get the names of the files in the repository, and we don’t really care whether their content changed.

To check this, let’s change all the C files in the kernel sources (using some fish shell scripting):

for f in (fd -e c)
    echo 1 >> $f
end

git status | wc -l

28350

hyperfine --export-markdown /tmp/2.md --warmup 10 'git ls-files' 'find' 'fd --no-ignore' \
    'fd --no-ignore --hidden --exclude .git --type file --type symlink'

| Command | Mean [ms] | Min [ms] | Max [ms] | Relative |
|---|---|---|---|---|
| `git ls-files` | 16.8 ± 0.5 | 16.3 | 18.9 | 1.00 |
| `find` | 93.5 ± 0.7 | 92.7 | 95.5 | 5.55 ± 0.17 |
| `fd --no-ignore` | 86.1 ± 7.3 | 80.9 | 112.6 | 5.12 ± 0.46 |
| `fd --no-ignore --hidden --exclude .git --type file --type symlink` | 80.8 ± 6.6 | 77.8 | 115.0 | 4.80 ± 0.42 |

We see the same numbers as before, which is again consistent with the ls-files source code.

Run git checkout -f @ after this to remove the changes made to the files.

With New Files and -o

With files that are not yet committed, there are two subcases:

- files were created and added (with git add): then the files are in the index and reading the index is enough for ls-files, like above,
- files were created but not added: these files are not present in the index, but without the -o flag, ls-files won’t output them either, so it can still use the index, as before.

So the only case that needs further investigation is the use of -o. Since we don’t have baseline results for -o yet, let’s first see how it performs without any unadded new files.

Without any Unadded New Files (Baseline)

When we haven’t added any new files in the repository:

hyperfine --export-markdown /tmp/3.md --warmup 10 'git ls-files' 'git ls-files -o' 'find' \
    'fd --no-ignore' 'fd --no-ignore --hidden --exclude .git --type file --type symlink'

| Command | Mean [ms] | Min [ms] | Max [ms] | Relative |
|---|---|---|---|---|
| `git ls-files` | 16.7 ± 0.5 | 16.1 | 17.9 | 1.00 |
| `git ls-files -o` | 69.1 ± 0.7 | 67.8 | 70.8 | 4.12 ± 0.12 |
| `find` | 94.3 ± 0.5 | 93.4 | 95.3 | 5.63 ± 0.16 |
| `fd --no-ignore` | 86.6 ± 7.0 | 80.8 | 106.0 | 5.17 ± 0.44 |
| `fd --no-ignore --hidden --exclude .git --type file --type symlink` | 80.8 ± 7.4 | 77.9 | 118.0 | 4.82 ± 0.46 |

That suggests that git ls-files -o is performing some more work besides “just” reading the index. With strace, we see lines like:

strace -e !write git ls-files -o>/dev/null 2>/tmp/a

…

openat(AT_FDCWD, "Documentation/", O_RDONLY|O_NONBLOCK|O_CLOEXEC|O_DIRECTORY) = 4

newfstatat(4, "", {st_mode=S_IFDIR|0755, st_size=1446, ...}, AT_EMPTY_PATH) = 0

getdents64(4, 0x55df0a6e6890 /* 99 entries */, 32768) = 3032

…
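The system calls above are those of a directory traversal: open a folder, read its entries, repeat. A simplified Python sketch of what listing untracked files involves is shown below. It assumes the tracked paths are already known (for instance from the index) and ignores details such as nested repositories; `untracked_files` is an illustrative name, not Git's implementation.

```python
import os


def untracked_files(root, tracked):
    """Walk `root` and return relative paths that are not in `tracked`."""
    tracked = set(tracked)
    found = []
    stack = [root]
    while stack:
        directory = stack.pop()
        # One directory read per folder, similar to the openat/getdents64 pairs.
        with os.scandir(directory) as entries:
            for entry in entries:
                if entry.is_dir(follow_symlinks=False):
                    if entry.name != ".git":
                        stack.append(entry.path)
                    continue
                rel = os.path.relpath(entry.path, root).replace(os.sep, "/")
                if rel not in tracked:
                    found.append(rel)
    return sorted(found)
```

Unlike the index-only case, the cost here grows with the number of folders in the working tree, whether or not any untracked file exists.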

With Unadded New Files

Let’s add some files now:

for f in (seq 1 1000)
    touch $f
end

And compare with our baseline:

hyperfine --export-markdown /tmp/4.md --warmup 10 'git ls-files' 'git ls-files -o' 'find' \
    'fd --no-ignore' 'fd --no-ignore --hidden --exclude .git --type file --type symlink'

| Command | Mean [ms] | Min [ms] | Max [ms] | Relative |
|---|---|---|---|---|
| `git ls-files` | 16.8 ± 0.5 | 16.1 | 18.0 | 1.00 |
| `git ls-files -o` | 69.9 ± 1.2 | 68.1 | 72.6 | 4.17 ± 0.14 |
| `find` | 94.5 ± 0.6 | 93.4 | 96.3 | 5.64 ± 0.17 |
| `fd --no-ignore` | 86.8 ± 7.5 | 81.5 | 114.4 | 5.18 ± 0.48 |
| `fd --no-ignore --hidden --exclude .git --type file --type symlink` | 81.0 ± 4.5 | 78.6 | 96.3 | 4.83 ± 0.31 |

There is little to no statistically significant difference from our baseline, which highlights that much of the time is spent on things relatively independent of the number of files processed. It’s also worth noting that there is relatively little speed difference between git ls-files -o and fd --no-ignore --hidden --exclude .git --type file --type symlink.

Using strace, we can establish that every command except plain git ls-files walks the whole directory tree of the repository. By comparing the strace outputs of git ls-files -o and fd --no-ignore --hidden --exclude .git --type file --type symlink (the two commands that print the same file list), we can see that they make similar system calls for each file in the repository. How can we explain the (small) time difference between the two? I haven’t found convincing reasons in Git’s source code for this case. It might be that the use of the index gives ls-files a head start.

Conclusions

I’m now using git ls-files in my keyboard-driven text editor instead of fd or find. It is faster, although the perceived difference described in the Introduction is probably due to spikes in latency on a cold cache. With ls-files, the selection of files is also narrowed down to the ones I care about. That said, I’ve still kept the fd-based file listing as a fallback, as sometimes I’m not in a Git repository.
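The fallback logic can be sketched as follows. This is a simplified illustration rather than my actual editor configuration: it detects a repository by looking for a `.git` entry in the current folder or one of its parents, which is only an approximation of asking Git itself (for instance with `git rev-parse --is-inside-work-tree`). The function name `file_list_command` is hypothetical.

```python
from pathlib import Path


def file_list_command(start_dir):
    """Pick a listing command: git ls-files inside a repository, fd otherwise."""
    directory = Path(start_dir).resolve()
    for candidate in (directory, *directory.parents):
        # `.git` is a folder in a normal clone and a file in worktrees or submodules.
        if (candidate / ".git").exists():
            return ["git", "ls-files"]
    return ["fd", "--type", "file"]
```

The returned list can be handed to whatever runs the command, for example `subprocess.run(file_list_command("."), capture_output=True, text=True)`.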

After all, Git is already building an index, so why not use it to speed up jumping from file to file?

[^1]: With Telescope.nvim: `:Telescope find_files` ↩︎

[^2]: With Telescope.nvim: `:Telescope git_files show_untracked=false` ↩︎

[^3]: That’s not to say Git is slow. On the contrary, when one reads the release notes, it’s obvious that a lot of performance optimization work is done. ↩︎

[^4]: Using a shallow clone makes it faster for you to reproduce results locally. However, running the benchmarks again on a full clone does not significantly change the results. ↩︎

[^5]: Using the diff command on the outputs of git ls-files and fd --no-ignore --hidden --exclude .git --type file --type symlink. ↩︎

[^6]: This is inserted in this page using my asciinema hugo module. ↩︎

[^7]: This output has been edited to remove the warning about outliers. These warnings appeared only with asciinema, probably because it is disturbing the benchmark. This also explains why the values in this “asciicast” are different from the tables in the rest of the article: I’ve used values from runs outside asciinema for these tables. ↩︎

[^8]: See also https://jvns.ca/blog/2014/04/20/debug-your-programs-like-theyre-closed-source/ ↩︎
